According to the BBC (and many others)...

Libido problems 'brain not mind'

Scans appear to show differences in brain functioning in women with persistently low sex drives, claim researchers. The US scientists behind the study suggest it provides solid evidence that the problem can have a physical origin.

The research in question (which hasn't been published yet) has been covered very well over at The Neurocritic. Basically the authors took some women with a diagnosis of "Hypoactive Sexual Desire Disorder" (HSDD), and some normal women, put them in an fMRI scanner and showed them porn. Different areas of the brain lit up.

So what? For starters we have no idea if these differences are real or not because the study only had a tiny 7 normal women, although strangely, it included a full 19 women with HSDD. Maybe they had difficulty finding women with healthy appetites in Detroit?

Either way, a study is only as big as its smallest group so this was tiny. We're also not told anything about the stats they used so for all we know they could have used the kind that give you "results" if you use them on a dead fish.
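To see why the choice of stats matters so much, here's a rough sketch of what happens if you compare two groups of this size at thousands of brain locations without correcting for multiple comparisons. Everything in it is an assumption for illustration: the 10,000 "voxels" are pure random noise, the test is a plain pooled-variance t-test, and we have no idea whether the authors did anything like this. Only the group sizes (7 and 19) come from the study.

```python
import math
import random
import statistics

N_CONTROLS, N_PATIENTS = 7, 19   # group sizes reported for the study
N_VOXELS = 10_000                # assumed number of locations, illustrative only
T_CRIT = 2.064                   # two-sided 5% cutoff for 7 + 19 - 2 = 24 degrees of freedom


def pooled_t(a, b):
    """Student's t statistic for two independent samples (pooled variance)."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * statistics.variance(a)
                  + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    return (statistics.mean(a) - statistics.mean(b)) / math.sqrt(
        pooled_var * (1 / na + 1 / nb))


rng = random.Random(0)
false_alarms = 0
for _ in range(N_VOXELS):
    # Both groups are drawn from the same distribution: there is no real effect.
    controls = [rng.gauss(0, 1) for _ in range(N_CONTROLS)]
    patients = [rng.gauss(0, 1) for _ in range(N_PATIENTS)]
    if abs(pooled_t(controls, patients)) > T_CRIT:
        false_alarms += 1

print(f"{false_alarms} of {N_VOXELS} pure-noise voxels look 'significant'")
```

Roughly one voxel in twenty should come out "significant" here despite there being nothing to find, which is the dead fish problem in a nutshell.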

But let's grant that the results are valid. This doesn't tell us anything we didn't already know. We know the women differ in their sexual responses - because that's the whole point of the study. And we know that this must be something to do with their brain, because the brain is where sexual responses, and every other mental event, happen.

So we already know that HSDD "has a physical origin", but only in the sense that everything does; being a Democrat or a Republican has a physical origin; being Christian or Muslim has a physical origin; speaking French as opposed to English has a physical origin; etc. etc. None of which is interesting or surprising in the slightest.

The point is that the fact that something is physical doesn't stop it being also psychological. Because psychology happens in the brain. Suppose you see a massive bear roaring and charging towards you, and as a result, you feel scared. The fear has a physical basis, and plenty of physical correlates like raised blood pressure, adrenaline release, etc.

But if someone asks "Why are you scared?", you would answer "Because there's a bear about to eat us", and you'd be right. Someone who came along and said, no, your anxiety is purely physical - I can measure all these physiological differences between you and a normal person - would be an idiot (and eaten).

Now sometimes anxiety is "purely physical" i.e. if you have a seizure which affects certain parts of the temporal lobe, you may experience panic and anxiety as a direct result of the abnormal brain activity. In that case the fear has a physiological cause, as well as a physiological basis.

Maybe "HSDD" has a physiological cause. I'm sure it sometimes does; it would be very weird if it didn't in some cases because physiology can cause all kinds of problems. But fMRI scans don't tell us anything about that.

Link: I've written about HSDD before in the context of flibanserin, a drug which was supposed to treat it (but didn't). Also, as always, British humour website The Daily Mash hit this one on the head...

Brain Scans Prove That The Brain Does Stuff

14.01

wsn

Posted in

bad neuroscience,

flibanserin,

fMRI,

media,

mental health,

neurofetish,

woo

Absolutely Confabulous

13.58

wsn

Confabulation is a striking symptom of some kinds of brain damage. Patients often tell fantastic stories about things that have happened to them, or that are going on now. It's a classic sign of Korsakoff's syndrome, a disorder caused by vitamin B1 deficiency due to chronic alcoholism. Korsakoff's was memorably illustrated on House (Season 1 Episode 10, to be exact). Here's a clip; unfortunately, it's overdubbed in Russian, but you can hear the original if you pay attention.

Why does confabulation happen? An influential theory is that confabulation is caused by a failure to filter out irrelevant memories. Suppose I ask you to tell me what happened yesterday. As you reply, yesterday's memories will probably trigger all kinds of associations with other memories, but you'll be able to recognize those as irrelevant: that wasn't yesterday, that was last week.

A confabulating patient can't do that, this theory says, so they end up with a huge jumble of memories; the confabulated stories are an attempt to make some sense of this mess. See above for my attempt to confabulate a story linking the three random concepts of a cat, a fire engine and a chair.

Now British neuroscientists Turner, Cipolotti and Shallice argue that this is only part of the truth: Spontaneous confabulation, temporal context confusion and reality monitoring. They discuss three patients, all of whom began to confabulate after suffering ruptured aneurysms of the anterior communicating artery, which destroyed parts of their ventromedial prefrontal cortex. The patients' stories are tragic, although we can take solace in the fact that they presumably don't know that. The confabulations ranged from the mildly odd:

Patient HS was a 59-year-old man admitted after being found disoriented in the street. [he] had undergone clipping of an ACoA aneurysm 25 years previously. He had been left with a profound confusional state, memory impairment, and confabulation. As a result, HS had been unable to return to work and had spent at least part of the intervening period homeless... He... continued to produce spontaneous confabulations involving temporal distortions (believing that he had undergone surgery only 18 months previously) and other source memory distortions (confusing memories of interactions with the examiner with interactions with other patients).

To the surreal:

GN was disoriented to place, situation, and time and produced consistent confabulations, for example, believing that the year was 1972 and that he was in a hospital in America after being shot. He regularly produced markedly bizarre confabulations, for example, reporting that he had attended a party the night before and met a woman with a bee’s head. He frequently attempted to act upon his mistaken beliefs, for example, attempting to leave the hospital to attend meetings.

Anyway, in order to try to discover the mechanism of confabulation, they gave the patients some memory tests. The results were clear: the confabulating patients had no problems remembering stuff, but were unable to tell where they remembered it from.

For example, in one task, the subjects were shown a series of pictures, some of which appeared only once, and some of which were repeated. They had to say which ones were repeats.

The patients performed normally the first time they did this task, but when they did the test again, this time with a different subset of pictures repeated, they ran into problems, wrongly saying that pictures that appeared only once during the session were repeats. They were unable to tell the difference between repeats within the session and repeats from previous sessions. This replicates an earlier study of other confabulators.

But Turner et al found that this lack of awareness for the source of information wasn't just limited to when things happened. The confabulating patients were also unable to tell the difference between things they'd actually heard, and things they'd only imagined.

Subjects were read a list of 15 words, and also told to silently imagine 15 other words (e.g. "imagine a fruit beginning with A" - apple). They were later asked to remember the words and to say whether they were heard or just imagined. Patients did well on the task except that they wrongly said that they'd actually heard many of the imagined words.

The authors conclude that confabulation is caused by a failure to recognize the source of memories, not just in terms of time, but in terms of whether they were real or fantasy. For a confabulator, all memories are of equal importance. Why this happens as a result of damage to certain parts of the brain remains, however, a mystery.

Posted in

mental health,

papers,

vmPFC

Sex and Money on the Brain

06.00

wsn

Back in 1991, Mark Knopfler sang

"Sex and money are my major kicks
Get me in a fight I like the dirty tricks"

Now twenty years later a team of French neuroscientists have followed up on this observation with a neuroimaging study: The Architecture of Reward Value Coding in the Human Orbitofrontal Cortex.

Sescousse et al note that people like erotic stimuli, i.e. porn, and they also like money. However, there's a difference: porn is, probably, a more "primitive" kind of rewarding stimulus, given that naked people have been around for as long as there have been people, whereas money is a recent invention.

The orbitofrontal cortex (OFC) is known to respond to all kinds of rewarding stimuli, but it's been suggested that the more primitive the reward, the more likely it is to activate the evolutionarily older posterior part of the OFC, whereas abstract stimuli, like money, activate more anterior parts of that area.

While this makes intuitive sense, it's never been directly tested. So Sescousse et al took 18 heterosexual guys, put them in an fMRI scanner, showed them porn, and gave them money. Specifically:

Two categories (high and low intensity) of erotic pictures and monetary gains were used. Nudity being the main criteria driving the reward value of erotic stimuli, we separated them into a “low intensity” group displaying women in underwear or bathing suits and a “high intensity” group displaying naked women in an inviting posture. Each erotic picture was presented only once to avoid habituation. A similar element of surprise was introduced for the monetary rewards by randomly varying the amounts at stake: the low amounts were €1-3 and the high amounts were €10-12.

You've gotta love neuroscience. Although the authors declined to provide any samples of the stimuli used.

Anyway, what happened?

As hypothesized, monetary rewards specifically recruited the anterior lateral OFC ... In contrast, erotic rewards elicited activity specifically in the posterior part of the lateral OFC straddling [fnaar fnaar] the posterior and lateral orbital gyri. These results demonstrate a double dissociation between monetary/erotic rewards and the anterior/posterior OFC ... Among erotic-specific areas, a large cluster was also present in the medial OFC, encompassing the medial orbital gyrus, the straight [how appropriate] gyrus, and the most ventral part of the superior frontal gyrus. [immature emphasis mine]

In other words, the posterior-primitive, anterior-abstract relationship did seem to hold, at least if you accept that money is more abstract than porn. (Many other areas were activated by both kinds of rewards, such as the ventral striatum, but these were less interesting as they've been identified in many previous studies.)

Overall, this is a good study, and a nice example of hypothesis-testing using fMRI, which is to be preferred to just putting people in a scanner and seeing which parts of the brain light up in a purely exploratory manner...

Shock and Cure - With Magnets

14.20

wsn

Electroconvulsive therapy (ECT) is the oldest treatment in psychiatry that's still in use today. ECT uses a brief electrical current to induce a generalized seizure. No-one knows why, but in many cases this rapidly alleviates depression - amongst other things.

The problem with ECT is that it may cause memory loss. It's hotly debated how serious a problem this is, and most psychiatrists agree that the risk is justified if the alternative is untreatable illness. But it's fair to say that, whether or not the memory loss is as bad as some people believe, the fear that it might be is the main limitation on the use of the treatment.

Wouldn't it be handy if there was a way of getting the benefits of ECT without the risk of side effects? To that end, people have tried tinkering with the specifics of the electrical stimulation - the frequency and waveform of the current, the location of the electrodes, etc. - but unfortunately it seems like the settings that work best tend to be the ones with the most side effects.

Enter magnetic seizure therapy (MST). As the name suggests, this is like ECT, except it uses powerful magnets, instead of electrical current, to cause the seizures. In fact though, the magnets work by creating electrical currents in the brain by electromagnetic induction, so it's not entirely different.

MST is thought to be more selective than ECT, in that it induces seizures in the surface of the brain - the cerebral cortex - but not the hippocampus, and other structures buried deeper in the brain, which are involved in memory.

It was first proposed in 2001, and since then it's been tested in a number of very small trials in monkeys and people. Now a group of German psychiatrists say that it's as effective as ECT, but with fewer side effects, in a new trial of 20 severely depressed people. Ironically, they work on Sigmund Freud Street, Bonn. I am not sure what Freud would say about this.

The trial was randomized, but not blinded: it's hard to blind people to this because the equipment used looks completely different. Nor was there a placebo group. All the patients had failed to improve with multiple antidepressants, and psychotherapy in almost all cases, and were therefore eligible for ECT. If anything, the MST group were slightly more ill than the ECT group at baseline.

The ECT they used was right unilateral. This is probably not quite as effective as stimulation which targets both sides of the brain (bitemporal or bifrontal), but has fewer side-effects.

So what happened? After 12 sessions, MST and ECT both seemed to work, and they were equally effective on average. Some patients got much better, some only got a bit better.

What about side effects? MST was noticeably "gentler", in that it didn't cause headaches or muscle pain, and people recovered from the seizures much faster (2 minutes vs 8 minutes to reorientation) after MST. This may have been because the seizures (as assessed using EEG) were less intense.

In terms of the all-important memory and cognitive side effects, however, it's not clear what was going on. They used a whole bunch of neuropsychological tests. In some of them, people got worse over the course of the sessions. In others, they got better. But in several, the scores went up and down with no meaningful pattern. If anything the MST group seemed to do a bit better but to be honest it's impossible to tell because there's so much data and it's so messy.

Unfortunately the tests they used have been criticized for not picking up the kinds of memory problems that some ECT patients complain of e.g. the "wiping" of old memories. For some reason they didn't just ask people whether they felt their memory was damaged or not.

Overall, this trial confirms that MST is a promising idea, but it remains to be seen whether it has any meaningful advantages over old school shock therapy...

Kayser S, Bewernick BH, Grubert C, Hadrysiewicz BL, Axmacher N, & Schlaepfer TE (2010). Antidepressant effects, of magnetic seizure therapy and electroconvulsive therapy, in treatment-resistant depression. Journal of psychiatric research PMID: 20951997

Posted in

antidepressants,

freud,

mental health,

papers

Worst. Antidepressant. Ever.

04.22

wsn

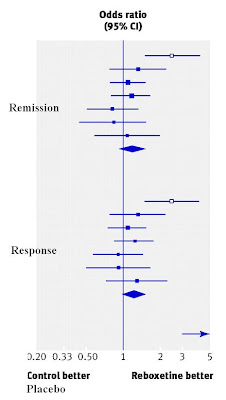

Reboxetine is an antidepressant. Except it's not, because it doesn't treat depression.

Reboxetine was introduced to some fanfare, because its mechanism of action is unique - it's a selective norepinephrine reuptake inhibitor (NRI), which has no effect on serotonin, unlike Prozac and other newer antidepressants. Several older tricyclic antidepressants were NRIs, but they weren't selective because they also blocked a shed-load of receptors.

So in theory reboxetine treats depression while avoiding the side effects of other drugs, but last year, Cipriani et al in a headline-grabbing meta-analysis concluded that in fact it's the exact opposite: reboxetine was the least effective new antidepressant, and was also one of the worst in terms of side effects. Oh dear.

And that was only based on the published data. It turns out that Pfizer, the manufacturers of reboxetine, had chosen to not publish the results of most of their clinical trials of the drug, because the data showed that it was crap.

The new BMJ paper includes these unpublished results - it took an inordinate amount of time and pressure to make Pfizer agree to share them, but they eventually did - and we learn that reboxetine is:

- no more effective than a placebo at treating depression.

- less effective than SSRIs, which incidentally are better than placebo in this dataset (a bit).

- worse tolerated than most SSRIs, and much worse tolerated than placebo.

Claims that reboxetine is dangerous on the basis of this study are a bit misleading - it may be, but there was no evidence for that in these data. It caused nasty and annoying side-effects, but that's not the same thing, because if you don't like side-effects, you could just stop taking it (which is what many people in these trials did).

Anyway, what are the lessons of this sorry tale, beyond reboxetine being rubbish? The main one is: we have to start forcing drug companies and other researchers to publish the results of clinical trials, whatever the results are. I've discussed this previously and suggested one possible way of doing that.

The situation regarding publication bias is far better than it was 10 years ago, thanks to initiatives such as clinicaltrials.gov. Almost all of the reboxetine trials were completed before the year 2000; if they were run today, it would be much harder to hide them, but still not impossible, especially in Europe. We need to make it impossible, everywhere, now.

The other implication is, ironically, good news for antidepressants - well, except reboxetine. The existence of reboxetine, a drug which has lots of side effects, but doesn't work, is evidence against the theory (put forward by Joanna Moncrieff, Irving Kirsch and others) that even the antidepressants that do seem to work, only work because of active placebo effects driven by their side effects.

So given that reboxetine had more side effects than SSRIs, it ought to have worked better, but actually it worked worse. This is by no means the nail in the coffin of the active placebo hypothesis but it is, to my mind, quite convincing.

Link: This study also blogged by Good, Bad and Bogus.

Posted in

antidepressants,

bad neuroscience,

drugs,

mental health,

papers,

science

Cannabinoids in Huntington's Disease

05.10

wsn

Two recent papers have provided strong evidence that the brain's endocannabinoid system is dysfunctional in Huntington's Disease, paving the way to possible new treatments.

Huntington's Disease is a genetic neurological disorder. Symptoms generally appear around age 40, and progress gradually from subtle movement abnormalities to dementia and complete loss of motor control. It's incurable, although medication can mask some of the symptoms. Singer Woody Guthrie is perhaps the disease's best known victim: he ended his days in a mental institution.

The biology of Huntington's is only partially understood. It's caused by mutations in the huntingtin gene, which lead to the build-up of damaging proteins in brain cells, especially in the striatum. But exactly how this produces symptoms is unclear.

The two new papers show that cannabinoids play an important role. First off, Van Laere et al used PET imaging to measure levels of CB1 receptors in the brain of patients in various stages of Huntington's. CB1 is the main cannabinoid receptor in the brain; it responds to natural endocannabinoid neurotransmitters, and also to THC, the active ingredient in marijuana.

They found serious reductions in all areas of the brain compared to healthy people, and interestingly, the loss of CB1 receptors occurred early in the course of the disease:

In the second paper, Blázquez et al found that Huntington's mice who also had a mutation eliminating the CB1 receptor suffered more severe symptoms, which appeared earlier, and progressed faster. This suggests that CB1 plays a neuroprotective role, which is consistent with lots of earlier studies in other disorders.

If so, drugs that activate CB1 - like THC - might be able to slow down the progression of the disease, and indeed THC did: Huntington's mice given THC injections stayed healthier for longer, although they eventually succumbed to the disease. Further experiments showed that mutant huntingtin switches off expression of the CB1 receptor gene, explaining the loss of CB1.

This graph shows performance on the RotaRod test of co-ordination: mice with Huntington's (R6/2) got worse and worse starting at 6 weeks of age (white bars), but THC slowed down the decline (black bars). The story was similar for other symptoms, and for the neural damage seen in the disease.

Altogether, these results support the notion that downregulation of type 1 cannabinoid receptors is a key pathogenic event in Huntington’s disease, and suggest that activation of these receptors in patients with Huntington’s disease may attenuate disease progression.

Now, this doesn't mean people with Huntington's should be heading out to buy Bob Marley posters and bongs just yet. For one thing, Huntington's disease often causes psychiatric symptoms, including depression and psychosis. Cannabis use has been linked to psychosis fairly convincingly, so marijuana might make those symptoms worse.

Still, it's very promising. In particular, it will be interesting to try out next-generation endocannabinoid boosting drugs, such as FAAH inhibitors, which block the breakdown of anandamide, one of the most important endocannabinoids.

In animals FAAH inhibitors have pain relieving, anti-anxiety, and other beneficial effects, but they don't cause the same behavioural disruptions that THC does. This suggests that they wouldn't get people high, either, but there's no published data on what they do in humans yet...

Blázquez C, et al. (2010). Loss of striatal type 1 cannabinoid receptors is a key pathogenic factor in Huntington's disease. Brain : a journal of neurology PMID: 20929960

Posted in

animals,

CNR1,

drugs,

mental health,

papers

In Dreams

02.20

wsn

Freud's The Interpretation of Dreams is a very long book but the essential theory is very simple: dreams are thoughts. While dreaming, we are thinking about stuff, in exactly the same way as we do when awake. The difference is that the original thoughts rarely appear as such, they are transformed into weird images.

Only emotions survive unaltered. A thought about how you're angry at your boss for not giving you a raise might become a dream where you're a cop angrily chasing a bank robber, but not one where you're a bank robber happily counting his loot. By interpreting the meaning of dreams, the psychoanalyst could work out what the patient really felt or wanted.

The problem of course is that it's easy to make up "interpretations" that follow this rule, whatever the dream. If you did dream that you were happily counting your cash after failing to get a raise, Freud could simply say that your dream was wish-fulfilment - you were dreaming of what you wanted to happen, getting the raise.

But hang on, maybe you didn't want the raise, and you were happy not to get it, because it supported your desire to quit that crappy job and find a better one...

Despite all that, since reading Freud I've found myself paying more attention to my dreams (once you start it's hard to stop) and I've found that his rule does ring true: emotions in dreams are "real", and sometimes they can be important reminders of what you really feel about something.

Most of my dreams have no emotions: I see and hear stuff, but feel very little. But sometimes, maybe one time in ten, they are accompanied by emotions, often very strong ones. These always seem linked to the content of the dream, rather than just being random brain activity: I can't think of a dream in which I was scared of something that I wouldn't normally be scared of, for example.

Generally my dreams have little to do with my real life, but those that do are often the most emotional ones, and it's these that I think provide insights. For example, I've had several dreams in the past six months about running; in every case, they were very happy ones.

Until several months ago I was a keen runner but I've let this slip and got out of shape since. While awake, I've regretted this, a bit, but it wasn't until I reflected on my dreams that I realized how important running was to me and how much I regret giving it up.

While awake, we're always thinking about things on multiple levels: we don't just want X, we think "I want X" (not the same thing), and then we go on to wonder "But should I want X?", "Why do I want X?", "What about Y, would that be better?", etc. Thoughts get piled up on top of one another: it's all very cluttered.

In a dream, most of the layers go silent, and the underlying feeling comes closer to the surface. The principle is the same, in many ways, as this.

But how do I know that feelings in dreams are the "real" ones? In most respects, dreams are less real than waking stuff: we dream about all kinds of crazy stuff. And even if we accept that dreams offer a window into our "underlying" feelings, who's to say that deeper is better or more real?

Well, "buried" feelings matter whenever they're not really buried. If a desire was somehow "repressed" to the point of having no influence at all, it might as well not exist. But my feelings about running were not unconscious as such - I was aware of them before I had these dreams - but I was "repressing" them, not in any mysterious sense, but just in terms of telling myself that it wasn't a big deal, I'd start again soon, I didn't have time, etc.

The problem was that this "repression" was annoying, it was causing long-term frustration etc. In dreams, all of these mild emotions spanning several months were compressed into powerful feelings for the duration of the dream (a few minutes, although the dreams "felt like" they lasted hours).

Overall, I don't think it's possible or useful to interpret dreams as metaphorical representations in a Freudian sense (a train going into a tunnel = sex, or whatever). I suspect that dreams are more or less random activity in the visual and memory areas of the brain. But that doesn't mean they're meaningless: they're activity in your brain, so they can tell you about what you think and feel.

Posted in

books,

freud,

mental health,

you

Israel and Palestine are Both Fighting Back...?

05.30

wsn

- Those evil Israelis are out to destroy Palestine, and the Palestinians are just fighting back.

- Those evil Palestinians are out to destroy Israel, and the Israelis are just fighting back.

- It's a cycle of violence, where both sides are fighting back against the other.

Wouldn't it be handy if science could provide an answer? According to the authors of a new paper in Proceedings of the National Academy of Sciences, the "cycle" school is right: both sides are fighting back against the other: Both sides retaliate in the Israeli-Palestinian conflict.

The authors (from Switzerland, Israel and the USA) took data on daily fatalities on both sides, and also of daily launches of Palestinian "Qassam" rockets at Israel. The data run from 2001, the start of the current round of unpleasantness, to late 2008, the Gaza War.

They looked to see whether the number of events that happened on a certain day predicted the number of events caused by the other side on the following days, i.e. whether a Palestinian death caused the Palestinians to retaliate by firing more rockets and killing more Israelis, and vice versa.

What happened? They found that both sides were more likely to launch attacks on the days following a death on their own side. The exception to this rule was that Israel did not noticeably retaliate against Qassam launches. This is perhaps because Qassams are so ineffective: out of 3,645 recorded launches, they killed 15 people.
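To put "so ineffective" into numbers, here's the back-of-the-envelope arithmetic using only the two figures quoted above. It's an average over all launches, nothing more; it says nothing about how the deaths were actually distributed.

```python
launches = 3645  # recorded Qassam launches, as quoted above
deaths = 15      # deaths attributed to those launches, as quoted above

deaths_per_launch = deaths / launches
launches_per_death = launches / deaths

print(f"{deaths_per_launch:.2%} deaths per launch on average")
print(f"about {launches_per_death:.0f} launches per death")
```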

These graphs show the number of "extra" actions on the days following an event, averaged over the whole 8 years, according to a statistical method called the Impulse Response Function. Note that the absolute size of the effects is larger for the Israeli retaliations (the Y axis is bigger); there were a total of 4,874 Palestinian fatalities and 1,062 Israeli fatalities.

The authors then used another method called Vector Autoregression to discover more about the relationship. In theory, this method controls for the past history of actions by a given side, so that it reveals the number of actions independently caused by the opposing side.
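For anyone wondering what a vector autoregression actually does, here's a minimal sketch of the general idea. It is not the authors' analysis: the data are synthetic Gaussian noise (real fatality counts are nothing like that), the model has a single lag, and the names `fit_var1`, `impulse_response` and `true_A` are made up for this example. Each day's pair of values is predicted from the previous day's pair, and the impulse response traces how a one-off shock to one series feeds through to both series on later days.

```python
import numpy as np


def fit_var1(series):
    """Least-squares fit of x[t] = c + A @ x[t-1] + noise for a (T, k) array."""
    past, present = series[:-1], series[1:]
    design = np.hstack([np.ones((len(past), 1)), past])
    coef, *_ = np.linalg.lstsq(design, present, rcond=None)
    intercept = coef[0]
    A = coef[1:].T  # A[i, j] = effect of yesterday's series j on today's series i
    return intercept, A


def impulse_response(A, shock, horizon):
    """Response to a one-off shock: row h is A^h @ shock."""
    responses = [np.asarray(shock, dtype=float)]
    for _ in range(horizon):
        responses.append(A @ responses[-1])
    return np.array(responses)


# Synthetic two-series example with a known coefficient matrix (an assumption).
rng = np.random.default_rng(0)
true_A = np.array([[0.2, 0.3],
                   [0.1, 0.2]])
x = np.zeros((5000, 2))
for t in range(1, len(x)):
    x[t] = true_A @ x[t - 1] + rng.normal(size=2)

_, A_hat = fit_var1(x)
irf = impulse_response(A_hat, shock=[1.0, 0.0], horizon=3)
print(np.round(A_hat, 2))
print(np.round(irf, 3))
```

The off-diagonal entries of the fitted matrix are the interesting part: they capture how much one series responds to the other's recent past, after accounting for its own.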

"the number of Qassams fired increases by 6% on the first day after a single killing of a Palestinian by Israel; the probability of any Qassams being fired increases by 11%; and the probability of any Israelis being killed by Palestinians increases by 10%. Conversely, 1 day after the killing of a single Israeli by Palestinians, the number of Palestinians killed by Israel increases by 9%, and the probability of any Palestinians being killed increases by 20%"

"....retaliation accounts for a larger fraction of Palestinian compared with Israeli aggression: in the levels specification, 10% of all Qassam rockets can be attributed to prior Israeli attacks on Palestinians, but only 4% of killings of Palestinians by Israel can be attributed to prior Palestinian attacks on Israel.... 6% of all days on which Palestinians attack Israel with rockets, and 5% of all days on which they attack by killing Israelis, can be attributed to retaliation; in contrast, this is true for only 2% of all days on which Israel kills Palestinians."

What are we to make of this? This is a good paper as far as it goes, and it casts doubt on earlier analyses finding that Israel is retaliating against Palestinians but not vice versa. However, the inherent problem with all of this research (beyond the fact that it's all based on correlations and can only indirectly imply causation) is that it focuses on individual acts of violence. The authors say, citing surveys, that

"Over one half of Israelis and three quarters of Palestinians think the other side seeks to take over their land. When accounting for their own acts of aggression, Israelis often claim to be merely responding to Palestinian violence, and Palestinians often see themselves as simply reacting to Israeli violence."

But I don't think many Israelis would argue that the IDF only kills individual Palestinians as a reflex reaction to a particular attack. They're claiming that the whole conflict is a defensive one, that the Palestinians are the aggressors, but that doesn't rule out their taking the initiative on a tactical level e.g. in destroying Palestinian military capabilities before they have a chance to attack. And vice versa on the other side.

WW2 was a war of aggression by the Axis powers, but that doesn't mean that the Allies only killed Axis soldiers after they'd attacked a certain place. The Allies were on the offensive for the second half of the war, and eventually invaded the Axis's own homelands, but it was still a defensive war, because the Axis were responsible for it.

For Israel and for Palestine, the other guys are to blame for the whole thing. Who's right, if anyone, is fundamentally a historical, political and ethical question, that can't be answered by looking at day-to-day variations in who's shooting when.

Comment Policy: Please only comment if you've got something to say about this paper, or related research. Comments that are just making the case for or against Israel will get deleted.

Posted in controversiology, media, papers, politics

How To Sell An Idea

02.35

wsn

You've got an idea: a new way of doing things; a change; a paradigm shift. It might work, it might be no better than what we've got already, or it might end up being a disaster. The honest way to present your proposal would be to admit its novelty, and hence the uncertainty: this is a new idea I had, I can't promise anything, but here are my reasons for thinking it's worth a try, here are the likely costs and benefits, here are the alternatives.

However, let's suppose you don't want to do that. That's hard work, and if your idea is crap, people could tell. How else could you convince them? By making it seem as though it's not a new idea at all.

You could dress your idea up as:

- the glorious past. Your idea is nothing more than how we did things back in the golden age, when everything was great. For some reason, people strayed from the true path, and things went bad. We should go back to the good old days. It worked then, so it'll work now. You'll use words like: restoring, reviving, regaining, renewing... "re" is your friend.

- the next step. Your idea is just the logical progression of what we're already doing. Things used to be bad, and then they started to change, and get better. Let's make them even better, by doing more of the same. It's inevitable, anyway: you can't stop history. You'll use words like: progress, forward, advance, build, grow...

- catching up. You're just saying we should bring stuff into line with the way things are done elsewhere, which as we know, is working well. It's not even a matter of moving forward, so much as keeping up. It would be weird not to change. We don't want to be dinosaurs. You'll use words like: modernization, rationalization, reform...

- keeping things the same. Things are fine right now, and don't need improving. But in order for things to stay great, we must adapt to changing circumstances, so we'll have to make a few adjustments, but don't worry, fundamentally things are going to stay just as they are. You'll use words like: preserving, maintaining, protecting, upholding, strengthening...

Of course, there are plenty of changes that really are these things, to various degrees. Sometimes the past was glorious, relatively speaking (France 1942 springs to mind); sometimes we do need to catch up.

But every new idea still has an element of risk. Nothing has ever been tried and tested in the exact circumstances that we face now, because those circumstances have never existed before. Just because it worked before, or elsewhere, in a situation that we think is similar, is no guarantee. There are only degrees of certainty.

This doesn't mean we can't decide what to do, or that we shouldn't change anything. Not changing things is a plan of action in itself, anyway. The point is that we ought to be willing to try stuff that might not work, our guide to what's likely to happen being the evidence on what's worked before, critically appraised. "I don't know" is not a dirty phrase.

Posted in controversiology, media, politics

Genes for ADHD, eh?

02.10

wsn

"The first direct evidence of a genetic link to attention-deficit hyperactivity disorder has been found, a study says."

Wow! That's the headline. What's the real story?

The research was published in The Lancet, and it's brought to you by Williams et al from Cardiff University: Rare chromosomal deletions and duplications in attention-deficit hyperactivity disorder.

The authors looked at copy-number variations (CNVs) in 410 children with ADHD, compared to 1156 healthy controls. A CNV is simply a catch-all term for when a large chunk of DNA is either missing ("deletions") or repeated ("duplications"), compared to normal human DNA. CNVs are extremely common - we all have a handful - and recently there's been loads of interest in them as possible causes for psychiatric disorders.

What happened? Out of everyone with high quality data available, 15.6% of the ADHD kids had at least one large, rare CNV, compared to 7.5% of the controls. CNVs were especially common in children with ADHD who also suffered mental retardation (defined as having an IQ less than 70) - 36% of this group carried at least one CNV. However, the rate was still elevated in those with normal IQs (11%).

A CNV could occur anywhere in the genome, and obviously what it does depends on where it is - which genes are deleted, or duplicated. Some CNVs don't cause any problems, presumably because they don't disrupt any important stuff.

The ADHD variants were very likely to affect genes which had been previously linked to either autism, or schizophrenia. In fact, no less than 6 of the ADHD kids carried the same 16p13.11 duplication, which has been found in schizophrenic patients too.

So...what does this mean? Well, the news has been full of talking heads only too willing to tell us. Pop-psychologist Oliver James was on top form - by his standards - making a comment which was reasonably sensible, and only involved one error:

"Only 57 out of the 366 children with ADHD had the genetic variant supposed to be a cause of the illness. That would suggest that other factors are the main cause in the vast majority of cases. Genes hardly explain at all why some kids have ADHD and not others."

Well, there was no single genetic variant, there were lots. Plus, unusual CNVs were also carried by 7% of controls, so the "extra" mutations presumably only account for 7-8%. James also accused The Lancet of "massive spin" in describing the findings. While you can see his point, given that James's own output nowadays consists mostly of a Guardian column in which he routinely over/misinterprets papers, this is a bit rich.
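The subtraction behind that "extra" figure can be checked in a few lines. This is just back-of-envelope arithmetic using the numbers quoted above (57 of 366 ADHD children, 7.5% of controls); the variable names are my own, and the excess is only a crude estimate of how many cases the CNVs might account for.

```python
# Figures as reported in the post: 57 of 366 ADHD children carried a
# large, rare CNV, versus 7.5% of controls.
adhd_with_cnv = 57
adhd_total = 366
control_rate = 0.075

# Share of ADHD children carrying at least one large, rare CNV.
adhd_rate = adhd_with_cnv / adhd_total

# Crude "excess": how much higher the ADHD rate is than the background rate.
excess = adhd_rate - control_rate

print(f"ADHD children with a CNV: {adhd_rate:.1%}")
print(f"Excess over controls:     {excess:.1%}")
```

That works out to 15.6% of the ADHD children, and an excess of roughly 8 percentage points over the controls.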

The authors say that

"the findings allow us to refute the hypothesis that ADHD is purely a social construct, which has important clinical and social implications for affected children and their families."

But they've actually proven that "ADHD" is a social construct. Yes, they've found that certain genetic variants are correlated with certain symptoms. Now we know that, say, 16p13.11-duplication-syndrome is a disease, and that its symptoms include (but aren't limited to) attention deficit and hyperactivity. But that doesn't tell us anything about all the other kids who are currently diagnosed with "ADHD", the ones who don't have that mutation.

"ADHD" is evidently an umbrella term for many different diseases, of which 16p13.11-duplication-syndrome is one. One day, when we know the causes of all cases of attention deficit and hyperactivity symptoms, the term "ADHD" will become extinct. There'll just be "X-duplication-syndrome", "Y-deletion-syndrome" and (because it's not all about genes) "Z-exposure-syndrome".

When I say that "ADHD" is a social construct, I don't mean that people with ADHD aren't ill. "Cancer" is also a social construct, a catch-all term for hundreds of diseases. The diseases are all too real, but the concept "cancer" is not necessarily a helpful one. It leads people to talk about Finding The Cure for Cancer, for example, which will never happen. A lot of cancers are already curable. One day, they might all be curable. But they'll be different cures.

So the fact that some cases of "ADHD" are caused by large rare genetic mutations doesn't prove that the other cases are genetic. They might or might not be - for one thing, this study only looked at large mutations, affecting at least 500,000 bases. Given that even a deletion or insertion of just one base in the wrong place could completely screw up a gene, these could be just the tip of the iceberg.

But the other problem with claiming that this study shows "a genetic basis for ADHD" is that the variants overlapped with the ones that have recently been linked to autism, and schizophrenia. In other words, these genes don't so much cause ADHD, as protect against all kinds of problems, if you have the right variants.

If you don't, you might get ADHD, but you might get something else, or nothing, depending on... we don't know. Other genes and the environment, presumably. But "7% of cases of ADHD associated with mutations that also cause other stuff" wouldn't be a very good headline...

Posted in bad neuroscience, genes, mental health, oliver james, papers, woo
